SQR-085

USDF EFD storage requirements

Abstract

We have analyzed the EFD data collected over the past few years and estimated the expected storage growth for the EFD at the USDF during survey operations. The peak average throughput observed is approximately 600 GB per week. Assuming this rate remains constant, we project an annual storage growth of about 30 TB. As of now, the EFD at the USDF contains approximately 30 TB of data, and we have recently expanded its storage capacity to 100 TB, which should be sufficient through the end of 2026. We recommend that the USDF begin planning for a migration from InfluxDB v1 Enterprise to InfluxDB v3 Enterprise. The newer version supports object store for persistence and is generally a better fit for Kubernetes-based deployments. Additionally, we believe the information presented in this document can support the T&S software team in reviewing the CSCs’ implementations, with a particular focus on optimizing data throughput for the MTM1M3 CSC. Finally, we recommend regularly monitoring EFD data rates to ensure storage capacity remains adequate and to inform the USDF of any infrastructure upgrades needed well in advance.

Introduction

Rubin Observatory generates a large amount of engineering data, which is stored in the Engineering and Facilities Database (EFD). The EFD data is used for real-time monitoring of the observatory systems using Chronograf dashboards, among other tools.

The data is collected at the Summit and replicated to USDF for long-term storage using Sasquatch.

In the Summit environment, the EFD data is retained for a nominal period of 30 days. In the USDF environment, however, the data must be retained for the project’s lifetime, which imposes significant storage requirements for the database.

Data throughput from the CSCs

Each Commandable SAL Component (CSC) produces data in the form of telemetry, events, and commands. These are published to Kafka topics in Sasquatch and then stored in InfluxDB, a time-series database.

The data throughput from the CSCs at a given time is a function of the number of CSCs enabled, the size of the messages produced to each topic, and the frequency at which these messages are produced.
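The dependence described above can be written as a small model. The sketch below is illustrative only: the `Topic` class, the message sizes, and the rates are hypothetical placeholders chosen to exercise the function, not measured values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Topic:
    """A Kafka topic fed by one CSC (all values used below are hypothetical)."""
    csc: str              # name of the CSC producing the topic
    message_bytes: int    # average serialized message size, in bytes
    rate_hz: float        # messages produced per second while the CSC is enabled


def throughput_bytes_per_s(topics, enabled_cscs):
    """Sum message size x frequency over the topics of the enabled CSCs."""
    return sum(
        t.message_bytes * t.rate_hz for t in topics if t.csc in enabled_cscs
    )


# Hypothetical example values, not measurements.
topics = [
    Topic("MTM1M3", message_bytes=2000, rate_hz=50.0),
    Topic("MTMount", message_bytes=500, rate_hz=20.0),
    Topic("ESS", message_bytes=200, rate_hz=1.0),
]

all_enabled = throughput_bytes_per_s(topics, {"MTM1M3", "MTMount", "ESS"})
m1m3_disabled = throughput_bytes_per_s(topics, {"MTMount", "ESS"})
print(all_enabled, m1m3_disabled)  # 110200.0 10200.0
```

Disabling a single high-rate CSC changes the total substantially in this toy example, which is why a constant-rate assumption tends to overestimate the long-term volume.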

The assumption that the CSCs are always enabled and produce data at a constant rate is not accurate and would lead to an overestimation of the EFD storage requirements, especially over several years of data collection. In reality, even during survey operations, CSCs will transition between enabled and disabled states based on the operational needs of the observatory.

A better approach is to base the estimate of the EFD storage requirements on the data actually collected.

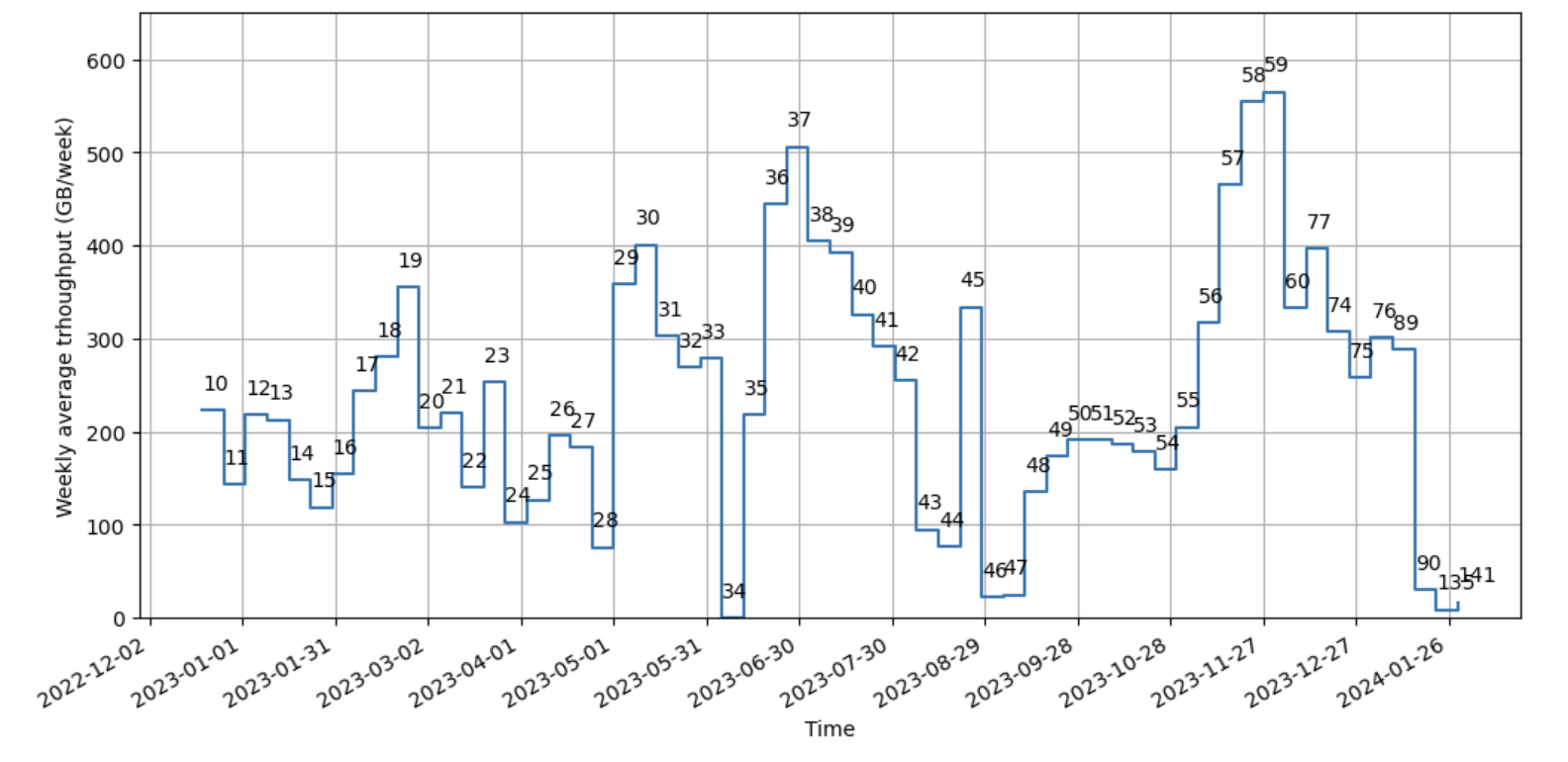

Weekly average throughput

A weekly average data throughput smooths out daily fluctuations in the data and aligns well with Summit activities that are planned on a weekly basis.

Because the EFD data is organized in shards partitioned by time, and the duration of a shard in the EFD is seven days, the size of the EFD shards over time is a direct measure of the weekly average throughput.

The size of the shards can be determined using the influx_inspect report-disk command.

$ influx_inspect report-disk /var/lib/influxdb/
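As a rough cross-check, shard sizes can also be summed directly from the file system. The sketch below is not the method used for this note. It assumes the usual InfluxDB v1 layout `<data_dir>/<database>/<retention_policy>/<shard_id>/`, and the function name `shard_sizes_bytes` is hypothetical.

```python
from pathlib import Path


def shard_sizes_bytes(data_dir):
    """Return {(database, retention_policy, shard_id): size in bytes}.

    Assumes the layout <data_dir>/<database>/<retention_policy>/<shard_id>/.
    """
    sizes = {}
    for shard_dir in Path(data_dir).glob("*/*/*"):
        db, rp, shard = shard_dir.parts[-3:]
        # Skip plain files and internal entries such as the "_series"
        # directory or the "_internal" monitoring database.
        if not shard_dir.is_dir() or db.startswith("_") or rp.startswith("_"):
            continue
        # Add up every file below the shard directory (TSM files, index, WAL links).
        sizes[(db, rp, shard)] = sum(
            f.stat().st_size for f in shard_dir.rglob("*") if f.is_file()
        )
    return sizes
```

With seven-day shards, each value returned is the volume written for one week, so dividing by 10^9 gives GB/week directly.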

In 2023, test campaigns for M1M3, TMA, and M2, along with AuxTel runs, were used to measure the data throughput to the EFD and helped estimate the storage requirements at the USDF for 2024 and 2025.

Figure 1 shows the size of the EFD shards for data collected over the past few years. Comparing the amount of data collected during these campaigns with the data collected in late 2024 with ComCam on-sky and in early 2025 with LSSTCam on-sky confirms this approach. We expect a nearly constant data throughput of ~600 GB/week during survey operations.

The current size of the EFD database at the USDF is 31 TB.

Fig. 1 Size of EFD shards for data collected over time.

The largest shard in Figure 1 corresponds to the first week of July, with 578 GB.

Using this shard as a reference, 578 GB/week, or about 30 TB/year, is probably close to the total throughput we will have during survey operations. This is also supported by the fact that M1M3 is the largest contributor to the EFD data throughput, as discussed in the next section.
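The conversion from the weekly shard size to a yearly growth figure is simple arithmetic. The sketch below makes it explicit, assuming 52 weeks per year and decimal units (1 TB = 1000 GB). The function names are hypothetical.

```python
WEEKS_PER_YEAR = 52
SECONDS_PER_WEEK = 7 * 24 * 3600


def annual_growth_tb(weekly_gb):
    """Yearly growth in TB for a constant weekly volume in GB (1 TB = 1000 GB)."""
    return weekly_gb * WEEKS_PER_YEAR / 1000


def average_rate_mb_per_s(weekly_gb):
    """Average sustained write rate in MB/s (1 GB = 1000 MB)."""
    return weekly_gb * 1000 / SECONDS_PER_WEEK


print(f"{annual_growth_tb(578):.1f} TB/year")    # 30.1 TB/year
print(f"{average_rate_mb_per_s(578):.2f} MB/s")  # 0.96 MB/s
```

The rounder figure of 600 GB/week gives 31.2 TB/year, so both numbers support the estimate of about 30 TB/year.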

Relative throughput and the case of M1M3

In December 2023, during a test campaign, we measured the relative throughput of the CSCs from the size of the individual topics retained in Kafka for 72 h. This period was chosen because it coincided with stable operations and high data activity, providing a representative sample for analysis.

The tables listing the sizes of telemetry and event topics from that period are presented in Appendix A. Below is a summary of the relative throughput per CSC based on the measurements.

| CSC | Size (MB) | % of total |
|---|---|---|
| ATCamera | 109 | 0.03% |
| ATDome | 8 | 0.00% |
| ATHexapod | 3 | 0.00% |
| ATMCS | 388 | 0.09% |
| ATPneumatics | 10 | 0.00% |
| ATPtg | 1486 | 0.35% |
| CCCamera | 304 | 0.07% |
| DIMM | 82 | 0.02% |
| ESS | 7357 | 1.75% |
| HVAC | 70 | 0.02% |
| MTAirCompressor | 212 | 0.05% |
| MTHexapod | 305 | 0.07% |
| MTM1M3 | 353700 | 84.20% |
| MTM1M3TS | 5113 | 1.22% |
| MTM2 | 8270 | 1.97% |
| MTMount | 33453 | 7.96% |
| MTPtg | 2238 | 0.53% |
| MTVMS | 6183 | 1.47% |
| Scheduler | 787 | 0.19% |
| Total | 420077 | 100.00% |

This table shows that the MTM1M3 and MTMount CSCs produce the highest telemetry throughput in the EFD. MTM1M3 alone is responsible for 84% of the total telemetry throughput.
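The per-CSC totals follow from the per-topic sizes in Appendix A by grouping on the CSC name. The tooling used for this aggregation is not described here, so the sketch below is only one possible way to do it. It assumes topic names of the form `<CSC>.<topic>`, as listed in Appendix A, and its helper names are hypothetical.

```python
from collections import defaultdict


def size_per_csc(topic_sizes_mb):
    """Sum topic sizes per CSC; the CSC is the topic name up to the first dot."""
    totals = defaultdict(int)
    for topic, size_mb in topic_sizes_mb.items():
        csc = topic.split(".", 1)[0]
        totals[csc] += size_mb
    return dict(totals)


def percent_of_total(size_mb, total_mb):
    """Share of the total in percent, rounded to two decimals as in the table."""
    return round(100 * size_mb / total_mb, 2)


# A few telemetry topics taken from Appendix A (sizes in MB).
sample = {
    "MTVMS.data": 3216,
    "MTVMS.psd": 2893,
    "MTVMS.miscellaneous": 74,
    "DIMM.status": 82,
}
print(size_per_csc(sample))  # {'MTVMS': 6183, 'DIMM': 82}

# Shares recomputed from the per-CSC totals in the summary table.
print(percent_of_total(353700, 420077))  # MTM1M3: 84.2
print(percent_of_total(33453, 420077))   # MTMount: 7.96
```

The MTVMS total of 6183 MB reproduces the value in the summary table, which suggests the table was built by this kind of grouping.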

| CSC | Size (MB) | % of total |
|---|---|---|
| ATAOS | 45 | 0.15% |
| ATCamera | 47 | 0.15% |
| ATDome | 45 | 0.15% |
| ATDomeTrajectory | 49 | 0.16% |
| ATHeaderService | 68 | 0.22% |
| ATHexapod | 47 | 0.15% |
| ATMCS | 77 | 0.25% |
| ATMonochromator | 49 | 0.16% |
| ATOODS | 45 | 0.15% |
| ATPneumatics | 82 | 0.27% |
| ATPtg | 45 | 0.15% |
| ATSpectrograph | 49 | 0.16% |
| ATWhiteLight | 48 | 0.16% |
| CCCamera | 80 | 0.26% |
| CCHeaderService | 49 | 0.16% |
| CCOODS | 66 | 0.22% |
| DIMM | 92 | 0.30% |
| Electrometer | 99 | 0.32% |
| ESS | 897 | 2.91% |
| FiberSpectrograph | 77 | 0.25% |
| GCHeaderService | 204 | 0.66% |
| GenericCamera | 147 | 0.48% |
| GIS | 1413 | 4.59% |
| HVAC | 69 | 0.22% |
| LaserTracker | 49 | 0.16% |
| MTAirCompressor | 100 | 0.33% |
| MTAOS | 45 | 0.14% |
| MTDome | 45 | 0.15% |
| MTDomeTrajectory | 49 | 0.16% |
| MTHexapod | 96 | 0.31% |
| MTM1M3 | 25323 | 82.22% |
| MTM1M3TS | 89 | 0.29% |
| MTM2 | 361 | 1.17% |
| MTMount | 121 | 0.39% |
| MTPtg | 45 | 0.15% |
| MTRotator | 47 | 0.15% |
| MTVMS | 70 | 0.23% |
| OCPS | 92 | 0.30% |
| PMD | 45 | 0.15% |
| Scheduler | 95 | 0.31% |
| ScriptQueue | 97 | 0.32% |
| Test | 46 | 0.15% |
| Watcher | 46 | 0.15% |
| WeatherForecast | 49 | 0.16% |
| Total | 30800 | 100.00% |

Events represent a smaller fraction, about 7% of the total throughput. The MTM1M3 CSC produces by far the highest event throughput in the EFD (82% of the event total), followed by the GIS, ESS, and MTM2 CSCs.

This analysis helped us to identify a bug in the MTM1M3 CSC that was producing messages to the MTM1M3.logevent_forceActuatorWarning event topic at a higher rate than expected (DM-41835). This finding suggests further improvements in the MTM1M3 CSC implementation to reduce the current throughput observed (see also Appendix A).

The data throughput from the CSCs is also shown in Sankey diagrams representing the data flow from each CSC to the EFD for telemetry and events topics. In these visualizations, the line connecting the CSC to its topic represents the relative throughput among the CSCs. The thicker the line, the higher the throughput.

EFD storage growth estimation

The EFD storage requirements are estimated based on the EFD data collection (see Figure 1) and the current project schedule, also considering the InfluxDB Enterprise cluster setup, the license model, and safety margins.

In the current InfluxDB Enterprise setup at the USDF, we use two 8-core nodes with 100 TB of local SSD storage each and a replication factor of 2 for redundancy.

The InfluxDB Enterprise license model is based on the number of cores used in the cluster. The current production license allows for 16 cores. If needed, we can add two more data nodes to the cluster, in a setup of four 4-core nodes, to increase the storage capacity while keeping the same replication factor.

A commonly advised practice is to include a safety margin of around 20% to 50% to account for unexpected data growth.

Table 1 shows the estimated storage growth for the EFD data at the USDF, considering an increase of 30 TB/year during survey operations, a replication factor of 2, and a safety margin of 15 TB.

| Year | Replication factor (RF) | Total storage (TB) | Main drivers |
|---|---|---|---|
| 2024 | 2 | 60 | M1M3+TMA+M2+AuxTel |
| 2025 | 2 | 100 | Survey Operations |
| 2026 | 2 | 160 | Survey Operations |
| 2035 | 2 | 700-1000 | Survey Operations |
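One way to read the last rows of the table is as a linear extrapolation: starting from 160 TB in 2026 and adding 30 TB/year of data stored with a replication factor of 2. The sketch below reproduces only that reading, which is an interpretation rather than a stated method. It does not model the safety margin or how the upper end of the 2035 range is obtained, and the function name and defaults are hypothetical.

```python
def projected_storage_tb(year, base_year=2026, base_tb=160,
                         growth_tb_per_year=30, replication_factor=2):
    """Linear projection of total cluster storage from a baseline year."""
    years = year - base_year
    return base_tb + years * growth_tb_per_year * replication_factor


for year in (2026, 2030, 2035):
    print(year, projected_storage_tb(year))
# 2026 160
# 2030 400
# 2035 700
```

Under these assumptions the 2035 value of 700 TB matches the lower end of the range given in the table.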

Recommendations

We recommend that the USDF begin planning for a migration from InfluxDB v1 Enterprise to InfluxDB v3 Enterprise. The newer version supports object store for persistence and is generally a better fit for Kubernetes-based deployments.

Additionally, we believe the information presented in this document can support the T&S software team in reviewing the CSCs’ implementations, with a particular focus on optimizing data throughput for the MTM1M3 CSC. To reduce the long-term storage requirements for the EFD, we recommend that the Summit software teams review and optimize the CSCs’ data throughput. This can be achieved by reducing the frequency at which messages are produced and, where possible, the size of the messages produced for each topic. Because each data point is stored once per replica, reducing the data volume produced by the CSCs by X reduces the EFD storage requirements by 2X for a replication factor of 2, or by 4X if a replication factor of 4 is used for the InfluxDB Enterprise cluster in the future.
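The effect of the replication factor on the savings can be illustrated with a short calculation. In the sketch below, the 25% reduction is a hypothetical figure chosen for illustration, and the function name is not part of any existing tool.

```python
def storage_saved_tb(data_reduction_tb, replication_factor=2):
    """Cluster storage saved when the stored data shrinks by `data_reduction_tb`."""
    return data_reduction_tb * replication_factor


# Hypothetical: CSC optimizations cut the ~30 TB/year growth by 25%.
yearly_reduction_tb = 30 * 0.25
print(storage_saved_tb(yearly_reduction_tb))     # 15.0 TB/year at RF 2
print(storage_saved_tb(yearly_reduction_tb, 4))  # 30.0 TB/year at RF 4
```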

Finally, we recommend regularly monitoring the EFD data throughput and the storage available on the InfluxDB Enterprise cluster, and reevaluating the conclusions presented in this document, to ensure that the storage capacity for the EFD remains adequate for the data being collected and to inform the USDF of any infrastructure upgrades needed well in advance.
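A periodic check of this kind could be as simple as estimating the remaining headroom at the current growth rate. The sketch below is a minimal illustration, not an existing monitoring tool. The function names and the 52-week lead time are assumptions, and the inputs would come from the cluster's own disk usage metrics.

```python
def weeks_until_full(capacity_tb, used_tb, weekly_growth_gb):
    """Weeks of headroom left at a constant weekly growth (1 TB = 1000 GB)."""
    if weekly_growth_gb <= 0:
        raise ValueError("weekly_growth_gb must be positive")
    free_gb = max(capacity_tb - used_tb, 0) * 1000
    return free_gb / weekly_growth_gb


def needs_upgrade_planning(capacity_tb, used_tb, weekly_growth_gb,
                           lead_time_weeks=52):
    """True when the remaining headroom is shorter than the assumed lead time."""
    return weeks_until_full(capacity_tb, used_tb, weekly_growth_gb) < lead_time_weeks


# Hypothetical readings for a node with 100 TB of storage.
print(weeks_until_full(100, 40, 600))        # 100.0
print(needs_upgrade_planning(100, 40, 600))  # False
print(needs_upgrade_planning(100, 80, 600))  # True
```

The inputs should be per-node values that already include replication, since each node stores its own copy of the data.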

Appendix A

Size of telemetry and event topics stored in Kafka from 2023-12-04. Only topics with a size greater than 1 MB are listed.

| CSC | Telemetry Topic | Size (MB) |
|---|---|---|
| MTM1M3 | MTM1M3.forceActuatorData | 70423 |
| MTM1M3 | MTM1M3.appliedBalanceForces | 50851 |
| MTM1M3 | MTM1M3.appliedElevationForces | 48092 |
| MTM1M3 | MTM1M3.appliedAzimuthForces | 46533 |
| MTM1M3 | MTM1M3.appliedThermalForces | 46460 |
| MTM1M3 | MTM1M3.appliedForces | 21783 |
| MTM1M3 | MTM1M3.appliedCylinderForces | 18216 |
| MTM1M3 | MTM1M3.pidData | 11668 |
| MTM1M3 | MTM1M3.hardpointMonitorData | 9044 |
| MTM1M3 | MTM1M3.hardpointActuatorData | 7736 |
| MTM1M3 | MTM1M3.imsData | 4762 |
| MTM1M3 | MTM1M3.accelerometerData | 4369 |
| MTM1M3 | MTM1M3.powerSupplyData | 4177 |
| MTM1M3TS | MTM1M3TS.thermalData | 3643 |
| MTMount | MTMount.encoder | 3570 |
| MTM1M3 | MTM1M3.outerLoopData | 3418 |
| MTM1M3 | MTM1M3.inclinometerData | 3339 |
| MTVMS | MTVMS.data | 3216 |
| MTMount | MTMount.topEndChiller | 3162 |
| ESS | ESS.accelerometer | 2991 |
| MTVMS | MTVMS.psd | 2893 |
| MTM1M3 | MTM1M3.gyroData | 2828 |
| MTMount | MTMount.azimuthDrives | 2346 |
| MTMount | MTMount.elevationDrives | 2074 |
| MTMount | MTMount.balancing | 2066 |
| ESS | ESS.spectrumAnalyzer | 1831 |
| MTMount | MTMount.deployablePlatforms | 1801 |
| MTMount | MTMount.mirrorCoverLocks | 1771 |
| MTMount | MTMount.mirrorCover | 1771 |
| MTMount | MTMount.lockingPins | 1649 |
| MTMount | MTMount.cameraCableWrap | 1616 |
| MTMount | MTMount.elevation | 1560 |
| MTMount | MTMount.azimuth | 1556 |
| MTMount | MTMount.azimuthCableWrap | 1496 |
| MTMount | MTMount.mainCabinetThermal | 1482 |
| MTM2 | MTM2.displacementSensors | 1417 |
| ESS | ESS.accelerometerPSD | 1296 |
| ATPtg | ATPtg.mountPositions | 1150 |
| MTM2 | MTM2.ilcData | 956 |
| MTM2 | MTM2.forceErrorTangent | 918 |
| MTM1M3TS | MTM1M3TS.glycolLoopTemperature | 806 |
| Scheduler | Scheduler.observatoryState | 787 |
| MTM2 | MTM2.forceBalance | 760 |
| MTM2 | MTM2.positionIMS | 760 |
| MTMount | MTMount.cooling | 759 |
| MTMount | MTMount.azimuthDrivesThermal | 696 |
| MTM2 | MTM2.powerStatus | 692 |
| MTM2 | MTM2.powerStatusRaw | 692 |
| MTMount | MTMount.mainPowerSupply | 686 |
| MTPtg | MTPtg.mountPosition | 678 |
| MTPtg | MTPtg.currentTargetStatus | 663 |
| MTM2 | MTM2.netForcesTotal | 654 |
| MTMount | MTMount.safetySystem | 637 |
| MTM1M3TS | MTM1M3TS.mixingValve | 609 |
| MTM2 | MTM2.inclinometerAngleTma | 587 |
| ESS | ESS.temperature | 541 |
| MTPtg | MTPtg.mountStatus | 522 |
| MTMount | MTMount.dynaleneCooling | 484 |
| MTMount | MTMount.elevationDrivesThermal | 453 |
| MTMount | MTMount.auxiliaryCabinetsThermal | 409 |
| MTM2 | MTM2.netMomentsTotal | 374 |
| MTMount | MTMount.generalPurposeGlycolWater | 350 |
| MTMount | MTMount.compressedAir | 349 |
| MTPtg | MTPtg.timeAndDate | 330 |
| MTMount | MTMount.cabinet0101Thermal | 320 |
| ATPtg | ATPtg.timeAndDate | 316 |
| MTMount | MTMount.oilSupplySystem | 314 |
| MTM2 | MTM2.axialForce | 296 |
| MTAirCompressor | MTAirCompressor.analogData | 212 |
| ESS | ESS.dewPoint | 203 |
| ESS | ESS.relativeHumidity | 203 |
| ESS | ESS.pressure | 143 |
| MTHexapod | MTHexapod.actuators | 110 |
| MTHexapod | MTHexapod.application | 107 |
| CCCamera | CCCamera.daq_monitor_Reb_Trending | 90 |
| MTHexapod | MTHexapod.electrical | 88 |
| DIMM | DIMM.status | 82 |
| CCCamera | CCCamera.rebpower_Reb | 78 |
| MTVMS | MTVMS.miscellaneous | 74 |
| MTMount | MTMount.telemetryClientHeartbeat | 73 |
| ESS | ESS.electricFieldStrength | 68 |
| ATMCS | ATMCS.trajectory | 68 |
| ESS | ESS.lightningStrikeStatus | 66 |
| ATMCS | ATMCS.measuredMotorVelocity | 51 |
| ATMCS | ATMCS.measuredTorque | 51 |
| ATMCS | ATMCS.mount_Nasmyth_Encoders | 49 |
| MTM2 | MTM2.axialEncoderPositions | 47 |
| ATMCS | ATMCS.mount_AzEl_Encoders | 46 |
| MTM1M3TS | MTM1M3TS.glycolPump | 44 |
| ATMCS | ATMCS.torqueDemand | 43 |
| ATMCS | ATMCS.azEl_mountMotorEncoders | 41 |
| ATMCS | ATMCS.nasymth_m3_mountMotorEncoders | 35 |
| ATCamera | ATCamera.focal_plane_Reb | 33 |
| MTPtg | MTPtg.skyEnvironment | 27 |
| MTM2 | MTM2.axialActuatorSteps | 25 |
| MTM2 | MTM2.position | 22 |
| CCCamera | CCCamera.quadbox_PDU_24VC | 19 |
| MTPtg | MTPtg.namedAzEl | 19 |
| MTM2 | MTM2.tangentActuatorSteps | 16 |
| MTM2 | MTM2.temperature | 16 |
| CCCamera | CCCamera.rebpower_Rebps | 15 |
| ATCamera | ATCamera.daq_monitor_Reb_Trending | 14 |
| MTM2 | MTM2.tangentForce | 14 |
| ESS | ESS.airFlow | 13 |
| MTM2 | MTM2.tangentEncoderPositions | 13 |
| CCCamera | CCCamera.quadbox_PDU_48V | 12 |
| CCCamera | CCCamera.vacuum_Cold1 | 12 |
| CCCamera | CCCamera.vacuum_Cold2 | 12 |
| CCCamera | CCCamera.vacuum_Cryo | 12 |
| ATPtg | ATPtg.currentTargetStatus | 12 |
| MTM2 | MTM2.zenithAngle | 11 |
| ATCamera | ATCamera.focal_plane_Ccd | 11 |
| ATCamera | ATCamera.power | 11 |
| ATCamera | ATCamera.vacuum | 11 |
| MTM1M3TS | MTM1M3TS.flowMeter | 10 |
| CCCamera | CCCamera.quadbox_BFR | 10 |
| CCCamera | CCCamera.quadbox_PDU_24VD | 10 |
| CCCamera | CCCamera.daq_monitor_Store | 9 |
| ATPtg | ATPtg.mountStatus | 9 |
| CCCamera | CCCamera.focal_plane_RebTotalPower | 9 |
| CCCamera | CCCamera.quadbox_PDU_5V | 9 |
| ATDome | ATDome.position | 8 |
| ATCamera | ATCamera.daq_monitor_Sum_Trending | 6 |
| CCCamera | CCCamera.daq_monitor_Sum_Trending | 6 |
| ATCamera | ATCamera.focal_plane_RebsAverageTemp6 | 6 |
| ATCamera | ATCamera.focal_plane_RebTotalPower | 6 |
| ATCamera | ATCamera.daq_monitor_Store | 5 |
| ATCamera | ATCamera.bonn_shutter_Device | 5 |
| ATMCS | ATMCS.ackcmd | 5 |
| ATPneumatics | ATPneumatics.ackcmd | 4 |
| HVAC | HVAC.dynaleneP05 | 4 |
| ATHexapod | ATHexapod.positionStatus | 3 |
| HVAC | HVAC.chiller01P01 | 2 |
| HVAC | HVAC.chiller02P01 | 2 |
| HVAC | HVAC.chiller03P01 | 2 |
| HVAC | HVAC.crack01P02 | 2 |
| HVAC | HVAC.crack02P02 | 2 |
| HVAC | HVAC.manejadoraSblancaP04 | 2 |
| HVAC | HVAC.manejadoraSlimpiaP04 | 2 |
| HVAC | HVAC.manejadoraLower01P05 | 2 |
| HVAC | HVAC.manejadoraLower02P05 | 2 |
| HVAC | HVAC.manejadoraLower03P05 | 2 |
| HVAC | HVAC.manejadoraLower04P05 | 2 |
| HVAC | HVAC.fancoil01P02 | 2 |
| HVAC | HVAC.fancoil02P02 | 2 |
| HVAC | HVAC.fancoil03P02 | 2 |
| HVAC | HVAC.fancoil04P02 | 2 |
| HVAC | HVAC.fancoil05P02 | 2 |
| HVAC | HVAC.fancoil06P02 | 2 |
| HVAC | HVAC.fancoil07P02 | 2 |
| HVAC | HVAC.fancoil08P02 | 2 |
| HVAC | HVAC.fancoil09P02 | 2 |
| HVAC | HVAC.fancoil10P02 | 2 |
| HVAC | HVAC.fancoil11P02 | 2 |
| HVAC | HVAC.fancoil12P02 | 2 |
| ATPneumatics | ATPneumatics.loadCell | 2 |
| ATPneumatics | ATPneumatics.m1AirPressure | 2 |
| ATPneumatics | ATPneumatics.m2AirPressure | 2 |
| ATPneumatics | ATPneumatics.mainAirSourcePressure | 2 |
| ESS | ESS.airTurbulence | 1 |
| HVAC | HVAC.generalP01 | 1 |
| HVAC | HVAC.valvulaP01 | 1 |
| HVAC | HVAC.vex03LowerP04 | 1 |
| HVAC | HVAC.vex04CargaP04 | 1 |
| HVAC | HVAC.vea01P01 | 1 |
| HVAC | HVAC.vea01P05 | 1 |
| HVAC | HVAC.vea08P05 | 1 |
| HVAC | HVAC.vea09P05 | 1 |
| HVAC | HVAC.vea10P05 | 1 |
| HVAC | HVAC.vea11P05 | 1 |
| HVAC | HVAC.vea12P05 | 1 |
| HVAC | HVAC.vea13P05 | 1 |
| HVAC | HVAC.vea14P05 | 1 |
| HVAC | HVAC.vea15P05 | 1 |
| HVAC | HVAC.vea16P05 | 1 |
| HVAC | HVAC.vea17P05 | 1 |
| HVAC | HVAC.vec01P01 | 1 |
| HVAC | HVAC.vin01P01 | 1 |
| HVAC | HVAC.bombaAguaFriaP01 | 1 |

| CSC | Event Topic | Size (MB) |
|---|---|---|
| MTM1M3 | MTM1M3.logevent_forceActuatorWarning | 22555 |
| MTM1M3 | MTM1M3.logevent_interlockStatus | 1487 |
| GIS | GIS.logevent_systemStatus | 1369 |
| MTM1M3 | MTM1M3.logevent_ilcWarning | 1236 |
| ESS | ESS.logevent_heartbeat | 562 |
| ESS | ESS.logevent_sensorStatus | 335 |
| GCHeaderService | GCHeaderService.logevent_heartbeat | 204 |
| MTM2 | MTM2.logevent_logMessage | 188 |
| GenericCamera | GenericCamera.logevent_heartbeat | 147 |
| MTAirCompressor | MTAirCompressor.logevent_heartbeat | 100 |
| Electrometer | Electrometer.logevent_heartbeat | 99 |
| ScriptQueue | ScriptQueue.logevent_heartbeat | 97 |
| MTHexapod | MTHexapod.logevent_heartbeat | 96 |
| Scheduler | Scheduler.logevent_heartbeat | 95 |
| OCPS | OCPS.logevent_heartbeat | 92 |
| DIMM | DIMM.logevent_heartbeat | 92 |
| MTM2 | MTM2.logevent_summaryFaultsStatus | 84 |
| ATPneumatics | ATPneumatics.logevent_heartbeat | 82 |
| CCCamera | CCCamera.logevent_heartbeat | 80 |
| ATMCS | ATMCS.logevent_heartbeat | 77 |
| FiberSpectrograph | FiberSpectrograph.logevent_heartbeat | 77 |
| MTMount | MTMount.logevent_clockOffset | 75 |
| MTVMS | MTVMS.logevent_heartbeat | 70 |
| HVAC | HVAC.logevent_heartbeat | 69 |
| CCOODS | CCOODS.logevent_heartbeat | 66 |
| MTM1M3TS | MTM1M3TS.logevent_heartbeat | 57 |
| ATDomeTrajectory | ATDomeTrajectory.logevent_heartbeat | 49 |
| MTDomeTrajectory | MTDomeTrajectory.logevent_heartbeat | 49 |
| ATHeaderService | ATHeaderService.logevent_heartbeat | 49 |
| WeatherForecast | WeatherForecast.logevent_heartbeat | 49 |
| LaserTracker | LaserTracker.logevent_heartbeat | 49 |
| CCHeaderService | CCHeaderService.logevent_heartbeat | 49 |
| ATMonochromator | ATMonochromator.logevent_heartbeat | 49 |
| ATSpectrograph | ATSpectrograph.logevent_heartbeat | 49 |
| ATWhiteLight | ATWhiteLight.logevent_heartbeat | 48 |
| ATCamera | ATCamera.logevent_heartbeat | 47 |
| MTRotator | MTRotator.logevent_heartbeat | 47 |
| ATHexapod | ATHexapod.logevent_heartbeat | 47 |
| MTMount | MTMount.logevent_heartbeat | 46 |
| Test | Test.logevent_heartbeat | 46 |
| Watcher | Watcher.logevent_heartbeat | 46 |
| ATOODS | ATOODS.logevent_heartbeat | 45 |
| ATDome | ATDome.logevent_heartbeat | 45 |
| MTDome | MTDome.logevent_heartbeat | 45 |
| PMD | PMD.logevent_heartbeat | 45 |
| ATPtg | ATPtg.logevent_heartbeat | 45 |
| MTPtg | MTPtg.logevent_heartbeat | 45 |
| ATAOS | ATAOS.logevent_heartbeat | 45 |
| MTM2 | MTM2.logevent_heartbeat | 45 |
| MTAOS | MTAOS.logevent_heartbeat | 45 |
| GIS | GIS.logevent_heartbeat | 45 |
| MTM1M3 | MTM1M3.logevent_heartbeat | 44 |
| MTM2 | MTM2.logevent_m2AssemblyInPosition | 44 |
| MTM1M3TS | MTM1M3TS.logevent_glycolPumpStatus | 33 |
| ATHeaderService | ATHeaderService.logevent_logMessage | 19 |